Unveiling Transformers in Google Colab

In the ever-evolving landscape of artificial intelligence, the ability to harness complex models like Transformers is paramount. Imagine having these sophisticated models at your fingertips, ready to process and understand human language with remarkable nuance. Google Colab, a free, cloud-based notebook platform that democratizes access to powerful computing resources, makes that possible. Within this digital playground, installing the Hugging Face Transformers library becomes a gateway to exploring the cutting edge of natural language processing.

Setting up Transformers in Google Colab is akin to opening a portal to a universe of possibilities. It's about bridging the gap between theoretical understanding and practical application, allowing anyone with an internet connection to experiment with and build upon these transformative tools. This process, while seemingly technical, is surprisingly straightforward, requiring only a few lines of code to unlock a world of potential.

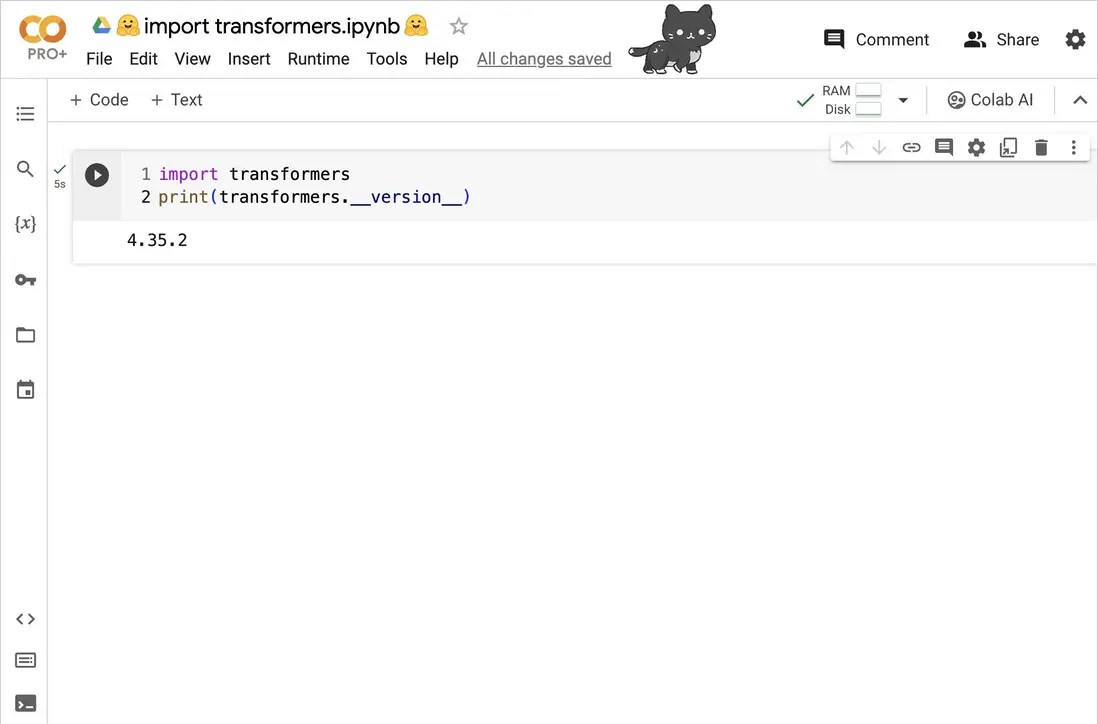

The journey of integrating Transformers into the Colab environment begins with a single command in a code cell: `!pip install transformers`. The leading `!` tells Colab to run the line as a shell command rather than as Python code. pip then downloads the `transformers` library together with the packages it depends on, such as the `tokenizers` library and the Hugging Face Hub client, and installs them into the notebook's environment. Think of it as assembling a complex machine, each part essential to its overall operation. Note that this step installs the library's code, not a model: the weights of a pretrained model are downloaded separately the first time you load it.
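To confirm what the install actually put into the notebook's environment, you can query the package metadata with Python's standard `importlib.metadata` module. The sketch below is illustrative rather than official tooling: the helper names `installed_version` and `core_requirements` are our own, and the dependency list is read from whichever `transformers` release pip installed instead of being hard-coded.

```python
import importlib.metadata as md
import re
from typing import List, Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of `package`, or None if it is not installed."""
    try:
        return md.version(package)
    except md.PackageNotFoundError:
        return None


def core_requirements(package: str) -> List[str]:
    """List the names of the non-optional dependencies an installed package declares."""
    try:
        requirements = md.requires(package) or []
    except md.PackageNotFoundError:
        return []
    names = []
    for requirement in requirements:
        # Skip optional extras, i.e. entries guarded by a marker like `extra == "torch"`.
        if re.search(r"extra\s*==", requirement):
            continue
        # The distribution name is the leading run of letters, digits, '.', '_' or '-'.
        match = re.match(r"[A-Za-z0-9][A-Za-z0-9._-]*", requirement)
        if match:
            names.append(match.group(0))
    return names


print("transformers:", installed_version("transformers"))
for dependency in core_requirements("transformers"):
    print(f"  {dependency}: {installed_version(dependency)}")
```

From the command line, `!pip show transformers` prints similar information, including the installed version and the packages it requires.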

Understanding the significance of this setup requires a brief glimpse into the history of these models. Transformers, introduced in 2017, have revolutionized how we approach natural language processing. Their architecture is built on attention mechanisms, which let the model relate every token in a sequence to every other token at once. Because these computations do not have to proceed one token at a time, they can run in parallel, making training faster and more efficient than with traditional sequential models such as recurrent neural networks. Installing the `transformers` library in Colab gives you access to pretrained models built on this architecture, empowering you to leverage its capabilities.
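To make the attention idea concrete, here is a toy, pure-Python version of scaled dot-product attention, the core operation of the Transformer architecture: each query is compared with every key, the scores are divided by the square root of the key dimension and normalized with a softmax, and the resulting weights mix the value vectors. This is a teaching sketch over plain lists with function names of our own choosing; real libraries run the same computation as batched matrix multiplications on a GPU.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    """Turn raw scores into positive weights that sum to 1."""
    largest = max(row)  # subtract the max first for numerical stability
    exps = [math.exp(x - largest) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Compute softmax(Q K^T / sqrt(d_k)) V, one query row at a time."""
    scale = math.sqrt(len(k[0]))  # d_k: the dimension of each key vector
    output = []
    for query in q:
        # Each query is handled independently of the others, which is why a GPU
        # can compute all of them at once as a single matrix multiplication.
        scores = [sum(qi * ki for qi, ki in zip(query, key)) / scale for key in k]
        weights = softmax(scores)
        # Weighted sum of the value vectors, column by column.
        output.append([sum(w * value[j] for w, value in zip(weights, v)) for j in range(len(v[0]))])
    return output


# One query that resembles the first key more than the second.
queries = [[1.0, 0.0]]
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0]]
print(scaled_dot_product_attention(queries, keys, values))
```

Because the query matches the first key more closely, the output is pulled toward the first value vector, while the second value still contributes; each row of attention weights always sums to 1.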

However, the path to harnessing Transformers isn't without its occasional bumps. Compatibility issues, dependency conflicts, and version mismatches can sometimes impede the installation. A typical example: Colab comes with many packages preinstalled, so installing a different version of one of them can clash with what other packages expect, and a module that was already imported keeps running its old version until the runtime is restarted. Understanding these potential hurdles is crucial for navigating the sometimes-turbulent waters of software integration. Fortunately, Colab's large user community and readily available documentation make these challenges much easier to troubleshoot.
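When a tutorial or model card says it needs a particular release, a quick check tells you whether a version mismatch is the culprit before you start reinstalling. The sketch below uses only the standard library; the helper names and the `"4.40.0"` minimum are hypothetical placeholders, not a real requirement of any particular model. In a notebook, `!pip check` is also worth running, since it reports installed packages whose declared dependencies are not satisfied.

```python
import importlib.metadata as md
import re
from typing import Optional, Tuple


def release_tuple(version: str) -> Tuple[int, ...]:
    """Extract the numeric release part: '4.40.1' -> (4, 40, 1), '4.42.0.dev0' -> (4, 42, 0)."""
    parts = []
    for piece in version.split("."):
        match = re.match(r"\d+", piece)
        if not match:
            break  # stop at a non-numeric segment such as 'dev0'
        parts.append(int(match.group(0)))
        if match.group(0) != piece:
            break  # a segment like '0rc1' keeps its number but ends the release part
    return tuple(parts)


def version_at_least(installed: str, minimum: str) -> bool:
    """Rough comparison of release numbers, padding with zeros so '4.40' equals '4.40.0'.

    Pre-release and dev suffixes are ignored; packaging.version.Version implements
    the full PEP 440 ordering if you need it.
    """
    a, b = release_tuple(installed), release_tuple(minimum)
    width = max(len(a), len(b))
    return a + (0,) * (width - len(a)) >= b + (0,) * (width - len(b))


def meets_minimum(package: str, minimum: str) -> Optional[bool]:
    """True/False if `package` is installed, None if it is missing entirely."""
    try:
        installed = md.version(package)
    except md.PackageNotFoundError:
        return None
    return version_at_least(installed, minimum)


# Hypothetical requirement: suppose your notebook was written against transformers 4.40.0.
print(meets_minimum("transformers", "4.40.0"))
```

If the check fails, upgrading with `!pip install -U transformers` (or pinning an exact release with `!pip install "transformers==<version>"`) is the usual fix; because an already-imported module stays loaded, restart the Colab runtime afterwards so the new version is actually used.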

Installing Transformers in Google Colab opens doors to various applications, including sentiment analysis, text generation, and machine translation. One benefit is access to GPUs, which Colab's free tier offers subject to availability and usage limits; a GPU can speed up both training and inference considerably. For instance, fine-tuning a sentiment analysis model on a large dataset typically runs much faster on a GPU runtime than on the CPU. Another advantage is Colab's collaborative nature, which simplifies sharing notebooks and working together on projects.
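Before counting on GPU acceleration, check that the runtime actually has one: in Colab a GPU must be selected under Runtime > Change runtime type, and free-tier GPUs are not always available. The sketch below assumes PyTorch is the backend (it is preinstalled on Colab); `pick_device` is our own helper name, and it returns the integer convention that `pipeline(..., device=...)` accepts, 0 for the first GPU and -1 for the CPU.

```python
def pick_device() -> int:
    """Return 0 (first CUDA GPU) if PyTorch can see a GPU, otherwise -1 (CPU).

    Assumes PyTorch as the backend; if torch is not installed or no GPU is
    attached to the runtime, this falls back to the CPU.
    """
    try:
        import torch
    except ImportError:
        return -1
    return 0 if torch.cuda.is_available() else -1


device = pick_device()
print("Using GPU" if device == 0 else "Using CPU (select a GPU under Runtime > Change runtime type)")
```

You would then pass the result along, for example `pipeline("sentiment-analysis", device=pick_device())`, so the same notebook runs on either kind of runtime.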

Let's outline the installation steps:

1. Open a new Colab notebook.

2. In a code cell, run `!pip install transformers`.

3. Import the pipeline helper: `from transformers import pipeline`.

4. Create a pipeline for a specific task, such as sentiment analysis: `classifier = pipeline('sentiment-analysis')`. The first time this runs, it downloads a default pretrained model.

5. Test it on a sample sentence: `result = classifier("This is a positive sentence.")`, then `print(result)` to see a list containing a dictionary with the predicted `label` and its confidence `score`.

This straightforward process lets you set up and use a Transformer model with only a few lines of code.
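Put together, these steps fit in a single cell. The sketch below wraps them in two small helpers whose names (`classify`, `format_results`) are our own; it imports `pipeline` inside the function so the cell can be defined even before the install has run. The sentiment-analysis pipeline returns one dictionary per input with `label` and `score` keys, which is what `format_results` relies on.

```python
from typing import Dict, List, Optional


def classify(texts: List[str], model: Optional[str] = None, device: int = -1) -> List[Dict]:
    """Run a Hugging Face sentiment-analysis pipeline over a batch of texts.

    model=None lets the pipeline choose its default checkpoint (it logs a warning
    recommending that you pin one); device=-1 runs on the CPU, 0 on the first GPU.
    The first call downloads the model weights, so it needs an internet connection.
    """
    from transformers import pipeline  # imported lazily; run `!pip install transformers` first

    classifier = pipeline("sentiment-analysis", model=model, device=device)
    return classifier(texts)


def format_results(texts: List[str], results: List[Dict]) -> List[str]:
    """Pair each input text with its predicted label and confidence score."""
    return [f"{r['label']:<8} {r['score']:.3f}  {text}" for text, r in zip(texts, results)]
```

In Colab you might call it with `texts = ["This is a positive sentence.", "This is a negative sentence."]` and then `for line in format_results(texts, classify(texts)): print(line)`. Passing an explicit `model=` name makes the results reproducible even if the library's default checkpoint changes.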

Advantages and Disadvantages of Installing Transformers in Google Colab

| Advantages | Disadvantages |
|---|---|
| Free GPU access | Internet dependency |
| Easy collaboration | Session timeouts |
| Pre-installed libraries | Limited storage |

Frequently Asked Questions:

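1. What is Google Colab? Answer: A free, cloud-hosted Jupyter notebook service, widely used for machine learning.

2. What are Transformers? Answer: A neural network architecture built on attention mechanisms and widely used in NLP. In this article, "Transformers" also refers to the Hugging Face `transformers` library, which provides pretrained Transformer models.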

3. Why install Transformers in Colab? Answer: For free GPU access and ease of use.

4. How do I install Transformers? Answer: Use `!pip install transformers`.

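5. What are some common issues? Answer: Dependency conflicts and version mismatches.

6. How do I troubleshoot? Answer: Run `!pip check` to spot conflicting packages, restart the runtime after upgrading a preinstalled package, and consult the documentation and online forums.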

7. What can I do with Transformers? Answer: Sentiment analysis, text generation, etc.

8. Are there any limitations? Answer: Yes, session timeouts and limited storage.

In conclusion, installing Transformers in Google Colab represents a pivotal step towards democratizing access to powerful AI tools. The simplicity of the installation process, coupled with the free availability of GPUs, empowers individuals to explore the fascinating world of natural language processing. While occasional challenges may arise, the supportive community and extensive documentation surrounding Colab provide a valuable safety net. By embracing these tools and resources, we can collectively unlock the full potential of Transformers and shape the future of AI. Start exploring today and witness the transformative power of these models firsthand.
